I’m looking for the best way to transfer the contents of an enormous S3 bucket to another one without losing any data or hitting capacity limits. What tools, commands, or AWS features work best for this?

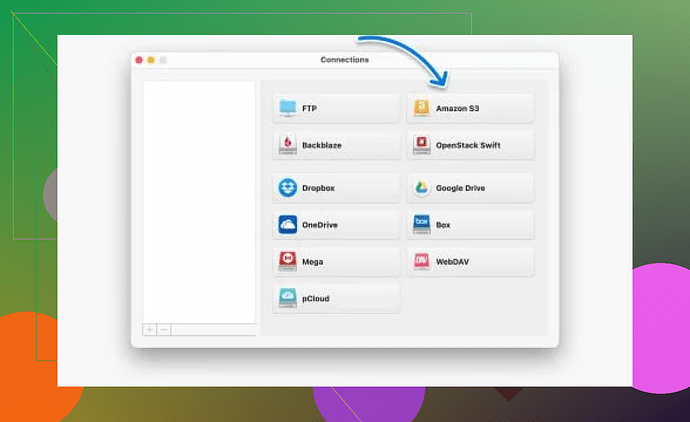

When faced with the necessity of transferring an immensely large Amazon S3 bucket, consider employing Commander One — a robust tool that streamlines the entire operation with finesse. This application isn’t just another software; it’s the craftsman’s chisel carving through the complexities of vast data migrations.

Capable of handling enormous storage demands swiftly and efficiently, Commander One empowers users to duplicate massive buckets seamlessly, no matter the size. Think of it as your trusted bridge, swiftly spanning rivers of data with dependable precision.

Whether you’re managing business-critical cloud storage or compiling gigabytes of archives, Commander One stands as a recommended solution, blending user-centric design with high performance to turn a daunting task into an effortlessly executed process.

For more information and to explore all that Commander One has to offer, check out their official website or trusted app directories online.

So uh… transferring a massive S3 bucket, huh? Strap in, it’s not that bad if you know the right tricks. While @mikeappsreviewer hyped up Commander One (and sure, it’s pretty nifty for managing complex migrations, you can check it out here, it might help simplify cloud storage stuff), I’d argue there’s a more AWS-native, nerd-approved way to get it done.

AWS’s s3 sync command using the AWS CLI is your best friend for this kind of job. It’s efficient, reliable, and has the backing of AWS itself—meaning, no third-party tools are involved to mess things up. Here’s the gist:

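- Install and configure the AWS CLI with the right credentials (run `aws configure` to set it up).
- Run: `aws s3 sync s3://source-bucket-name s3://destination-bucket-name`

You’ll often see `--exact-timestamps` tacked onto that command, but skip it here: that flag only changes how sync compares files when going from S3 to local, so it does nothing for a bucket-to-bucket copy and it doesn’t preserve anything. Copied objects get a fresh Last-Modified time in the destination either way, so don’t scratch your head when the timestamps differ.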
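If you’d rather preview the sync before it touches anything, here’s a rough sketch that wraps the command above and adds the CLI’s `--dryrun` flag. The function names `build_sync_command` and `run_sync` are made up for illustration, and it assumes the AWS CLI is installed and already configured.

```python
import shlex
import subprocess


def build_sync_command(source_bucket, dest_bucket, dry_run=True):
    """Return the argv list for an `aws s3 sync` between two buckets."""
    cmd = ["aws", "s3", "sync", f"s3://{source_bucket}", f"s3://{dest_bucket}"]
    if dry_run:
        # --dryrun lists the operations sync would perform without running them.
        cmd.append("--dryrun")
    return cmd


def run_sync(source_bucket, dest_bucket, dry_run=True):
    """Run the sync and return the CLI exit code (needs the AWS CLI on PATH)."""
    cmd = build_sync_command(source_bucket, dest_bucket, dry_run)
    print("Running:", shlex.join(cmd))
    return subprocess.run(cmd, check=False).returncode


if __name__ == "__main__":
    # Placeholder bucket names, same as in the command above.
    print(shlex.join(build_sync_command("source-bucket-name", "destination-bucket-name")))
```

Run it with `dry_run=True` first, skim the output, then flip it to `False` once the list of copies looks right.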

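If you’re transferring terabytes of data (chaotic energy, but I respect it), know that the CLI already switches to multipart transfers for large objects on its own. What you can tune in your CLI config is `multipart_threshold`, `multipart_chunksize`, and `max_concurrent_requests`, and raising the concurrency is usually what helps most with a bucket full of small objects. As for S3 Transfer Acceleration: it mainly helps when you’re uploading from a far-away client, and a bucket-to-bucket sync is a server-side copy that never passes through your machine, so I wouldn’t expect much from it here.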
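If you end up doing the copy from Python instead of the CLI, the same multipart tuning can be sketched with Boto3’s `TransferConfig`. This is only a sketch: `choose_part_size` and `copy_large_object` are hypothetical helpers, the 64 MiB default and the concurrency of 10 are arbitrary picks, and it leans on S3’s documented limits of 10,000 parts per upload and a 5 MiB minimum part size.

```python
import math

MIB = 1024 ** 2
MAX_PARTS = 10_000        # S3 limit on parts per multipart upload
MIN_PART_SIZE = 5 * MIB   # S3 minimum part size (the last part may be smaller)


def choose_part_size(object_size, preferred=64 * MIB):
    """Pick a part size that keeps the object within MAX_PARTS parts."""
    needed = math.ceil(object_size / MAX_PARTS)
    return max(preferred, needed, MIN_PART_SIZE)


def copy_large_object(source_bucket, dest_bucket, key, object_size):
    """Server-side multipart copy of one object via boto3's managed copy."""
    # Imported lazily so choose_part_size works even without boto3 installed.
    import boto3
    from boto3.s3.transfer import TransferConfig

    config = TransferConfig(
        multipart_threshold=64 * MIB,
        multipart_chunksize=choose_part_size(object_size),
        max_concurrency=10,  # assumption: tune to taste
    )
    boto3.client("s3").copy(
        {"Bucket": source_bucket, "Key": key}, dest_bucket, key, Config=config
    )
```

The part-size helper only matters for really big objects: anything under roughly 640 GB fits in 10,000 parts at the 64 MiB default.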

Another pro tip (not to flex): You can use AWS DataSync if you’re feeling fancy or want even easier bandwidth management. It’s a managed AWS service, meaning less hassle, though it does come with a price tag.

While Commander One may be slick for desktop enthusiasts or GUI fans, CLI gives you the raw power without dependencies. No shade intended, just my two cents. Also, don’t forget to double-check bucket policies and permissions—S3 buckets can be divas about who gets access to them.
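On the permissions point, here’s roughly what the minimum IAM policy for a plain copy looks like, built as a Python dict so you can dump it to JSON. Treat it as a starting point and not the final word: `copy_policy` is a made-up helper, and buckets with KMS encryption, object tags, ACLs, or cross-account ownership will likely need extra actions on top of this.

```python
import json


def copy_policy(source_bucket, dest_bucket):
    """Build a minimal IAM policy: read the source bucket, write the destination."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "ReadSource",
                "Effect": "Allow",
                "Action": ["s3:ListBucket", "s3:GetObject"],
                "Resource": [
                    f"arn:aws:s3:::{source_bucket}",
                    f"arn:aws:s3:::{source_bucket}/*",
                ],
            },
            {
                "Sid": "WriteDestination",
                "Effect": "Allow",
                "Action": ["s3:ListBucket", "s3:PutObject"],
                "Resource": [
                    f"arn:aws:s3:::{dest_bucket}",
                    f"arn:aws:s3:::{dest_bucket}/*",
                ],
            },
        ],
    }


if __name__ == "__main__":
    # Placeholder bucket names; swap in your own.
    print(json.dumps(copy_policy("source-bucket-name", "destination-bucket-name"), indent=2))
```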

But hey, it’s your call. Commander One, s3 sync, or DataSync—just don’t accidentally move data instead of copying! That’s the kind of oops moment no one wants.

Let me throw another option in the mix here, because while @mikeappsreviewer and @mike34 gave some solid advice, there’s more than one way to move a mountain (or in this case, a bucket).

If you’re not entirely sold on Commander One or the s3 sync command, consider AWS SDKs for a more programmable and automated approach. Most programming languages—Python (Boto3), Java, Node.js—you name it—have AWS SDKs with robust S3 handling methods. This allows you to write scripts that could not only copy data but also monitor progress, handle retries, and even apply transformations if needed during the transfer. For example, a Python script using Boto3 could offer flexibility like logging, error catching, or batching your data transfer to avoid hitting rate limits. Definitely something to think about if you need nerd-level customization.
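To make the Boto3 idea a bit more concrete, here’s a minimal sketch of a copy loop with logging and retries. It’s an illustration under assumptions, not a production tool: `copy_with_retries` and `copy_bucket` are names I made up, the backoff numbers are arbitrary, and it copies objects one at a time, which will be slow for a truly huge bucket.

```python
import logging
import time

log = logging.getLogger("bucket-copy")


def copy_with_retries(copy_one, key, max_attempts=5, base_delay=1.0, sleep=time.sleep):
    """Call copy_one(key), retrying with exponential backoff on any exception.

    Returns the number of attempts it took; re-raises after max_attempts.
    """
    for attempt in range(1, max_attempts + 1):
        try:
            copy_one(key)
            return attempt
        except Exception as exc:
            if attempt == max_attempts:
                log.error("giving up on %s after %d attempts: %s", key, attempt, exc)
                raise
            delay = base_delay * 2 ** (attempt - 1)
            log.warning("copy of %s failed (%s), retrying in %.1fs", key, exc, delay)
            sleep(delay)


def copy_bucket(source_bucket, dest_bucket, prefix=""):
    """Copy every object under prefix from source to destination, one by one."""
    import boto3  # lazy import so the retry helper works without boto3

    s3 = boto3.client("s3")

    def copy_one(key):
        # Managed server-side copy; switches to multipart for large objects.
        s3.copy({"Bucket": source_bucket, "Key": key}, dest_bucket, key)

    copied = 0
    paginator = s3.get_paginator("list_objects_v2")
    for page in paginator.paginate(Bucket=source_bucket, Prefix=prefix):
        for obj in page.get("Contents", []):
            copy_with_retries(copy_one, obj["Key"])
            copied += 1
    log.info("copied %d objects", copied)
    return copied
```

Passing the copy function in as an argument keeps the retry logic separate from the S3 calls, so you can try it out with a fake function before pointing it at real buckets.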

Another solid option, which is really underrated in my opinion, is using S3 Batch Operations. These are purpose-built for large-scale operations like copying or tagging millions of objects. You create a manifest (a list of the objects you want to copy, either a CSV file or an S3 Inventory report), set up a batch job, and S3 does the heavy lifting. The beauty here? It’s managed and scalable, so the size of the bucket stops being your problem. Just be sure to double-check pricing for this feature, since jobs are billed per job and per object processed and that adds up on a big bucket.
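For the manifest, a CSV is just one `bucket,key` row per object with the key URL-encoded. Here’s a small sketch of generating one; `build_manifest` is a hypothetical helper, and leaving `/` unencoded is my assumption about what the job expects, so check a couple of rows against the docs before submitting a real job.

```python
import csv
import io
from urllib.parse import quote


def build_manifest(bucket, keys):
    """Return CSV manifest text with one `bucket,key` row per object key."""
    buf = io.StringIO()
    writer = csv.writer(buf, lineterminator="\n")
    for key in keys:
        # Keys are URL-encoded; "/" is left as-is (assumption, see above).
        writer.writerow([bucket, quote(key, safe="/")])
    return buf.getvalue()


if __name__ == "__main__":
    # Placeholder bucket name and keys for illustration.
    print(build_manifest("source-bucket-name", ["logs/2024/app log.txt", "report.csv"]), end="")
```

For a bucket with millions of objects you’d feed this from a paginated listing or, more practically, just point the job at an S3 Inventory report instead of building the CSV yourself.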

Also, don’t overlook AWS Snowball, with one caveat: it’s built for moving data between your own site and S3, not straight from one bucket to another. If we’re talking petabytes and lousy network bandwidth is part of the picture (say, the data has to pass through your premises on the way), this physical device lets you load the data offline, then ship it back to AWS where they’ll import it into the bucket for you. It’s not the easiest method, but when things get massive, Snowball still ain’t melting under pressure.

Commander One is fine if you prefer a GUI-based tool, and for those less inclined toward CLI gymnastics, I get it. If you want to test whether it fits your workflow, here’s a link to check out Cloud File Management. But me? I’d stick with native AWS solutions for something this critical—less room for mishaps, less dependency drama.