I’m worried about my text being flagged by a ChatGPT detector even though I wrote it myself. Has anyone experienced false positives or knows how reliable these tools are? I need guidance on what steps to take so my work isn’t mistakenly identified as AI-generated.

Honestly, ChatGPT detectors are kinda all over the map when it comes to accuracy. There are real stories of people getting dinged with "AI-generated" labels for stuff they obviously sweated over themselves. It happens more often than you'd think, especially if your writing style is clear, formal, or a bit generic, because that's exactly what these detectors love to call "AI-speak." I had a friend basically rewrite the same paragraph three times before the detector stopped flagging it, so yeah, false positives are a thing.

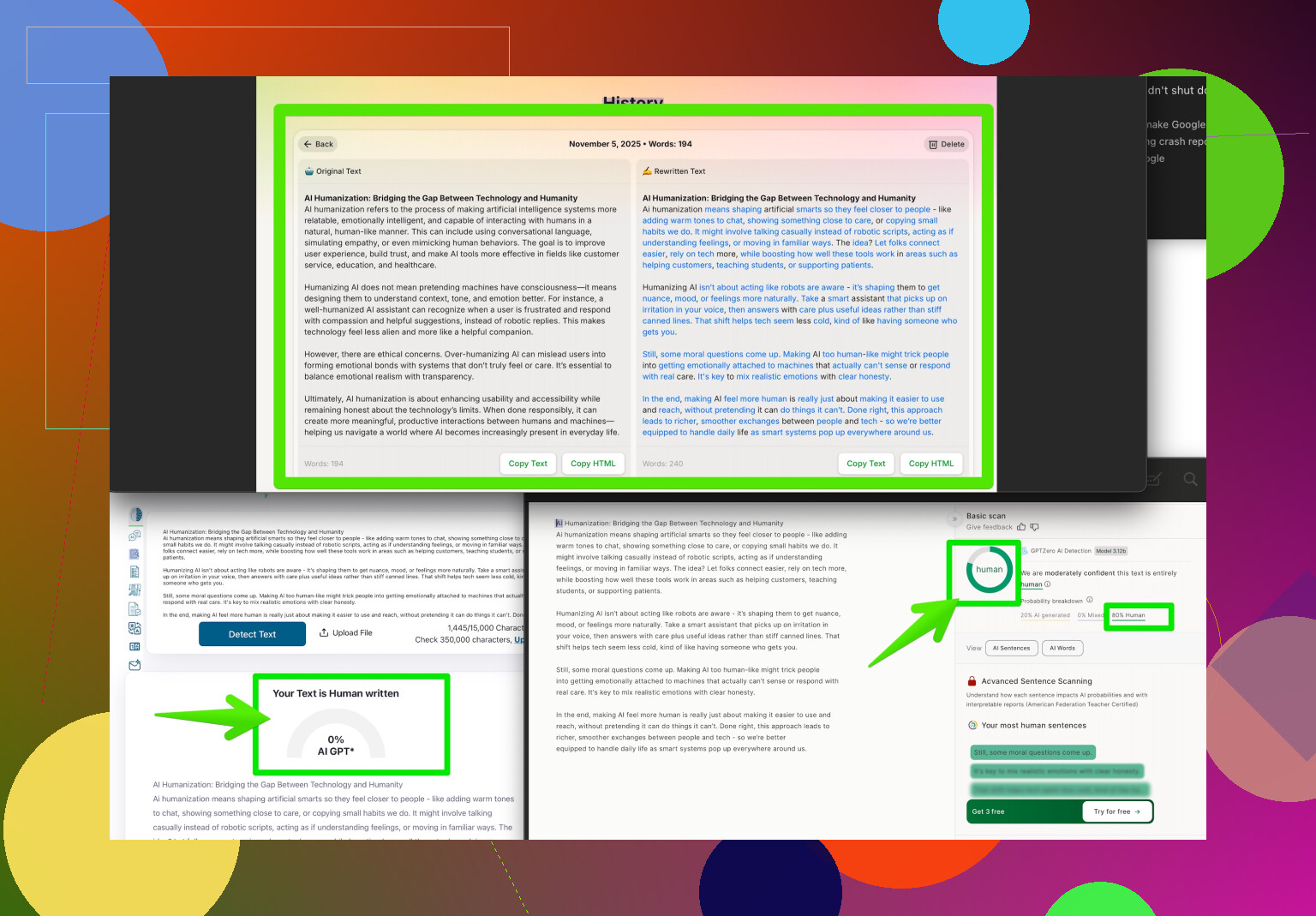

The best defense is to make your work sound as much like you as possible: throw in your quirks, personal stories, weird idioms, whatever feels less "robotic." Double-checking with multiple detectors helps; don't trust just one. If you want to be extra sure, or you need to pass these checks for school or publishing, tools like Clever AI Humanizer can help "humanize" your writing and avoid false flags.

But honestly? The tech behind those detectors just isn’t perfect yet. They’re useful for hints but not gospel. If you get flagged and you know it’s yours, just collect drafts, have some proof of process, and don’t sweat it too much. The worst thing you can do is panic or start second-guessing everything you write. Keep receipts and trust yourself.

Honestly, "accurate" is a stretch when we're talking about ChatGPT detectors. They're more like weather apps from 2009: you get a rough idea, but take it with a grain of salt. I've seen a college buddy's research paper get flagged just because he likes writing in super clear, no-nonsense sentences. He was peeved, and he had receipts: notes, voice memos, even that one sticky note covered in chicken scratch. It didn't matter; the detector wanted to play judge and jury.

I get where @sonhadordobosque is coming from about tweaking your style, adding quirks, etc. That might help, but sometimes you just get unlucky, especially if you’re writing about popular topics or using generic phrasings (which, let’s be real, happens in almost any field). Using a tool like Clever AI Humanizer can be helpful when you’re running up against a big submission deadline and don’t have time for nervous rewrites. But, here’s my semi-controversial take: if a detector dings your work and you 100% know it’s legit, don’t jump through hoops or rewrite everything. Just document your process—keep drafts, emails, timestamps, whatever you have. If someone questions you, show your work. Most professors (or editors, or whatever authority is looming) would rather see proof of your effort than a zillion hyper-personalized metaphors.

For the ultra-paranoid, I’d say run your stuff through multiple detectors only to see if there’s consensus, but remember, those tools often disagree anyway. At the end of the day, these detectors are more like speed bumps than brick walls. Annoying? Sure. But they shouldn’t stop you from writing the way you want—or from defending your own work if push comes to shove.

And if you'd like more tips from actual folks dealing with "humanizing" their AI-flagged text, Reddit has threads where users share strategies for making writing sound convincingly human. Lots of real talk without the doom and gloom.

This whole ChatGPT detector business is like trying to guess if a painting is real based on brushstrokes from a blurry photo. You guys already hit on how unpredictable and frustrating the false positives can be—especially if you’re the type to write clean, straightforward stuff (sorry, Hemingway fans). Honestly, in my own experience, I’ve found the detectors flip-flop on almost identical passages; patch in a weird metaphor or sprinkle some niche references, and suddenly, it’s “safe.” But that’s not a sustainable hack for everyone, especially when authenticity and clarity matter more than style.

The other posts suggest beefing up your personal writing flavor or running drafts through multiple checkers. That works, but I'll play devil's advocate: constantly changing your style just to slip past poorly tuned algorithms can mess with your flow or even weaken your argument. Good writing shouldn't be about dodging bots.

So let’s talk about Clever AI Humanizer—the tool that keeps popping up in these threads. I tried using it on some bland technical writing to see what would happen. Pros? Fast results, less chance of detection, and it doesn’t butcher your original meaning. Cons? Sometimes, the “humanized” text ends up adding fluff or minor quirks that don’t sound like you. If your prof or boss knows your style well, that might raise new questions. Also, it’s not magic—human review still beats machine guesses hands-down.

Bottom line: detectors are flawed yardsticks. Use tools like Clever AI Humanizer to help if you’re in a pinch, but double back and check if it left artifacts. Most importantly, keep your drafts/digital receipts because if anything gets flagged, having a paper trail is often more convincing than any “humanizer” or detector. And don’t stress too hard about matching what the detectors want—write clearly, write honestly, and if push comes to shove, just show your receipts.